In the rapidly expanding digital world, where billions of connected devices generate enormous volumes of data every second, traditional cloud-centric computing models are reaching their limits. This has paved the way for a transformative paradigm: Edge Computing and AI at the Edge. Instead of sending all data to distant data centers for processing, edge computing brings computational power closer to the data source, while AI at the edge embeds artificial intelligence directly into devices and local servers. As of mid-2025, this combination is redefining what’s possible in real-time decision-making, privacy, and operational efficiency across industries, from smart factories to autonomous vehicles.

What is Edge Computing?

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data. Instead of relying on a centralized cloud or a remote data center, edge computing processes data at the “edge” of the network. That edge can be an IoT device, a local server in a factory or retail store, or even a cell tower.

The core idea is to minimize the distance data travels, thereby reducing latency, conserving bandwidth, and enabling real-time processing capabilities that are not feasible with cloud-only approaches.
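To put rough numbers on the latency argument, the sketch below estimates only the propagation delay of a round trip over optical fiber. It assumes light travels at about 200,000 km/s in fiber. The distances are hypothetical, and real round trips add routing, queuing, and processing time on top, so treat the results as lower bounds.

```python
# Rough propagation-delay estimate for a request/response over optical fiber.
# Assumption: light travels at roughly 200,000 km/s in fiber (about two-thirds
# of its speed in a vacuum). Queuing, routing, and processing are ignored,
# so these figures are lower bounds on real latency.

FIBER_SPEED_KM_PER_MS = 200.0  # 200,000 km/s expressed per millisecond


def round_trip_propagation_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay (ms) for a given one-way distance."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Hypothetical distances: an on-site edge server vs. regional and remote data centers.
for label, km in [("on-site edge server", 0.5),
                  ("regional data center", 300),
                  ("remote cloud region", 2000)]:
    print(f"{label:>22}: {round_trip_propagation_ms(km):.3f} ms")
```

Under these assumptions, a 2,000 km round trip costs about 20 ms before any computation happens. A server on the same site adds only a few microseconds.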

Here’s a simple comparison of Edge vs. Cloud Computing:

What is AI at the Edge?

AI at the Edge refers to deploying Artificial Intelligence capabilities directly on edge devices or local edge servers. AI models are typically trained in powerful cloud environments. They are then optimized and deployed to run inference (making predictions or decisions) locally.

Instead of sending sensor data, video feeds, or voice commands to a remote cloud for AI processing and then waiting for a response, AI at the edge enables:

- Local Inference: The AI model processes data and makes decisions right where the data is generated.

- Reduced Latency: Decisions are made without a network round trip, which is critical for autonomous systems, robotics, and real-time security.

- Offline Capability: AI functions can continue even without continuous cloud connectivity.

- Enhanced Privacy: Sensitive data can be processed and analyzed locally, reducing the need to transmit it over networks.
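The pattern in the list above can be sketched in a few lines of Python. This is a hypothetical illustration, not a production design. `local_model` stands in for a real on-device model and uses a simple temperature threshold. `EdgeNode` shows how a device can decide locally and keep raw readings on the device. It queues only compact summaries until a cloud link is available.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


def local_model(reading: Reading) -> str:
    """Hypothetical stand-in for an on-device model: a fixed threshold rule."""
    return "alert" if reading.temperature_c > 80.0 else "ok"


class EdgeNode:
    """Runs inference locally and queues only compact summaries for the cloud."""

    def __init__(self, max_queue: int = 1000):
        # Bounded queue: if the link stays down too long, the oldest summaries are dropped.
        self.outbox = deque(maxlen=max_queue)

    def handle(self, reading: Reading) -> str:
        decision = local_model(reading)  # decided on the device, no cloud round trip
        if decision == "alert":
            # Only the sensor ID and the decision are queued, not the raw reading stream.
            self.outbox.append({"sensor": reading.sensor_id, "decision": decision})
        return decision

    def flush(self, cloud_available: bool) -> list:
        """Upload queued summaries when connected; otherwise keep them for later."""
        if not cloud_available:
            return []
        sent = list(self.outbox)
        self.outbox.clear()
        return sent


node = EdgeNode()
print(node.handle(Reading("s1", 72.5)))   # ok
print(node.handle(Reading("s2", 91.0)))   # alert
print(node.flush(cloud_available=False))  # [] while offline
print(node.flush(cloud_available=True))   # [{'sensor': 's2', 'decision': 'alert'}]
```

The device keeps working while offline. The cloud only ever sees the queued summaries, which reflects both the offline-capability and privacy points.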

This combination of Edge Computing and AI at the Edge is powerful because it merges the benefits of localized data processing with the intelligence of AI, unlocking new possibilities for automation and real-time responsiveness.

Why “Edge Computing and AI at the Edge” is Crucial Now

The confluence of Edge Computing and AI at the Edge is not merely a technological trend; it’s a strategic imperative driven by several factors:

- Explosive Data Growth: Billions of IoT devices (sensors, cameras, wearables) collectively generate data on the scale of zettabytes per year. Sending all of this raw data to the cloud is unsustainable in terms of bandwidth and cost. Processing at the edge filters and prioritizes data before it leaves the site.

- Latency-Sensitive Applications: Many modern applications demand near-instantaneous responses. Autonomous vehicles need to react in milliseconds; robotic arms in factories require real-time precision; healthcare monitoring devices need immediate alerts. Cloud latency is often too high for these critical use cases.

- Bandwidth Constraints and Cost: Transmitting massive amounts of raw data to the cloud is expensive and consumes significant network bandwidth, particularly in remote areas or for large deployments (e.g., smart city cameras). Edge processing drastically reduces the data sent upstream.

- Privacy and Security Concerns: Processing sensitive data (e.g., patient health records, video surveillance) locally at the edge can enhance privacy by minimizing data exposure during transit and reducing the amount of sensitive data stored in centralized cloud environments.

- Reliability and Autonomy: For critical infrastructure, remote locations, or systems that need to operate continuously, relying solely on cloud connectivity can be a single point of failure. Edge computing allows operations to continue even during network outages, improving resilience.

These drivers underscore why Edge Computing and AI at the Edge are moving from niche applications to foundational elements of enterprise and industrial digital strategies.
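Edge devices commonly cut upstream traffic with report-by-exception, also called deadband filtering. A reading is forwarded only when it moves more than a set tolerance away from the last value actually sent. The sketch below assumes a single numeric sensor stream. The readings and the 0.5 tolerance are made-up values for illustration.

```python
def deadband_filter(readings, tolerance):
    """Report-by-exception: forward a reading only when it differs by more than
    `tolerance` from the last value that was actually sent upstream."""
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > tolerance:
            last_sent = value
            yield value


# Hypothetical temperature stream with one brief spike.
readings = [20.0, 20.1, 19.9, 20.0, 20.2, 20.1, 20.0, 27.5, 27.4, 20.1]
sent = list(deadband_filter(readings, tolerance=0.5))
print(sent)                                               # [20.0, 27.5, 20.1]
print(f"forwarded {len(sent)} of {len(readings)} readings")  # forwarded 3 of 10 readings
```

In this made-up stream, only three readings go upstream: the first reading, the spike, and the return to normal. Choosing the tolerance is a trade-off between bandwidth savings and the resolution the cloud side receives.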

Key Applications Across Industries

The synergistic power of Edge Computing and AI at the Edge is fueling innovation and unlocking new capabilities across a multitude of industries.

These diverse applications highlight how Edge Computing and AI at the Edge are enabling smarter, faster, and more responsive operations closer to where the action happens.

Benefits and Challenges of Edge AI Deployment

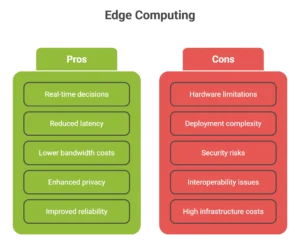

The transition to Edge Computing and AI at the Edge offers compelling advantages, but it also introduces a new set of complexities and challenges that organizations must carefully address.

Key Benefits:

- Real-time Decision-Making: Enables near-instantaneous responses, critical for autonomous vehicles, industrial control, and emergency services.

- Reduced Latency: Processes data within milliseconds of its generation, avoiding the delays of a round trip to the cloud.

- Lower Bandwidth Costs: Filters and processes data locally, significantly reducing the amount of data transmitted to the cloud.

- Enhanced Data Privacy & Security: Sensitive data can be processed and analyzed on-site, limiting its exposure in transit and in centralized data centers.

- Improved Reliability & Autonomy: Systems can keep functioning with intermittent or no cloud connectivity, ensuring continuous operation for critical applications.

- Scalability for IoT: Enables the deployment of billions of connected devices without overwhelming centralized cloud infrastructure.

- Personalized Experiences: AI can tailor responses and services to the immediate local context.

Significant Challenges:

- Hardware Limitations: Edge devices often have limited processing power, memory, and battery life, requiring highly optimized AI models.

- Deployment and Management Complexity: Managing, updating, and securing thousands or millions of distributed edge devices and their AI models can be daunting.

- Security at the Edge: Protecting large numbers of distributed edge devices from physical tampering and cyberattacks is harder than securing a centralized data center.

- Interoperability: Ensuring seamless communication and data exchange between diverse edge devices, gateways, and cloud platforms is difficult.

- Cost of Edge Infrastructure: Initial investment in specialized edge hardware and infrastructure can be substantial.

- Data Synchronization: Keeping relevant data consistent between the edge and the cloud without compromising performance or privacy is nontrivial.

- Model Optimization: Adapting complex models trained in the cloud to run efficiently on resource-constrained edge hardware requires specialized skills.

- Lack of Standardization: The edge ecosystem is still evolving, and universal standards for hardware, software, and communication protocols are still lacking.

Addressing these challenges effectively requires a robust strategy for device management, security, and a deep understanding of AI model optimization. For more insights into optimizing AI at the edge, consider resources like Intel’s overview on Edge AI.
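The Hardware Limitations and Model Optimization challenges can be made concrete with symmetric, per-tensor int8 quantization, one common model-compression step. Each float32 weight is stored as an 8-bit integer plus one shared scale factor, which cuts weight storage to a quarter. The weights below are hypothetical. Real toolchains (for example TensorFlow Lite or PyTorch quantization) add calibration, per-channel scales, and quantized activations. This minimal sketch shows only the core arithmetic.

```python
import struct


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.813, -1.27, 0.052, 0.4, -0.333]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(weights) * struct.calcsize("<f")  # 4 bytes per float32 value
int8_bytes = len(q) * struct.calcsize("<b")        # 1 byte per int8 value
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)                       # [81, -127, 5, 40, -33]
print(fp32_bytes, int8_bytes)  # 20 5
print(max_error <= scale / 2)  # True: rounding error is at most half a quantization step
```

The trade-off is visible in the last line. Storage shrinks fourfold, and each weight moves by at most half a quantization step. Whether that loss of precision is acceptable has to be checked against the model's accuracy on real data.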

The Future Landscape of Edge AI

The trajectory of Edge Computing and AI at the Edge points towards even greater intelligence, autonomy, and ubiquity.

- Hyper-Converged Edge Architectures: Expect more integrated hardware/software solutions that combine compute, storage, and networking into compact, powerful edge devices.

- Federated Learning at the Edge: AI models will increasingly be trained collaboratively across distributed edge devices without centralizing raw data, enhancing privacy and security while improving model performance.

- AI-Powered Edge Orchestration: AI itself will be used to manage, optimize, and secure large deployments of edge devices and AI models, automating tasks like resource allocation, anomaly detection, and predictive maintenance for the edge infrastructure.

- Edge-to-Cloud Continuum: The distinction between edge and cloud will blur further, with seamless workload migration and data flow between the two, managed by intelligent orchestration layers.

- Specialized Edge AI Chips: Purpose-built AI accelerators for edge devices will continue to advance, delivering more inference performance per watt.

- 5G and Beyond: The rollout of 5G and future wireless technologies will provide the low-latency, high-bandwidth backbone needed to fully unleash the potential of Edge Computing and AI at the Edge, enabling richer, more complex applications.

This ongoing evolution will make intelligent, real-time capabilities pervasive, transforming industries and personal experiences in profound ways.
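Federated learning at the edge typically relies on an aggregation step such as Federated Averaging (FedAvg). Each device trains on its own data and shares only its updated model weights. A coordinator then averages these weights, weighting each device by how many samples it trained on. The sketch below shows just that averaging step with plain Python lists. The device weights and sample counts are hypothetical. A real system would add local training rounds, secure aggregation, and handling for devices that drop out.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation of model weights.

    client_updates: list of (weights, num_samples) pairs, where every `weights`
    list has the same length. Returns the sample-weighted mean of the weights.
    Raw training data never appears here, only each device's weights and count.
    """
    if not client_updates:
        raise ValueError("no client updates received")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients reported no training samples")
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            avg[i] += w * n / total  # larger local datasets get proportionally more influence
    return avg


# Three hypothetical edge devices with different amounts of local data.
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 0.0], 300),
    ([2.0, 2.0], 100),
]
global_model = federated_average(updates)
print([round(x, 6) for x in global_model])  # [2.4, 0.8]
```

The device with 300 samples pulls the first weight toward its own value of 3.0. This weighting is what separates FedAvg from a plain unweighted mean.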

Edge Computing and AI at the Edge represent a fundamental shift in how we process data and deploy artificial intelligence. By bringing computational power and AI inferencing closer to the source of data, these technologies address critical challenges related to latency, bandwidth, privacy, and reliability, unlocking a new era of real-time intelligence. While the journey presents complexities in management and security, the transformative benefits for industries like manufacturing, healthcare, and autonomous systems are undeniable. Embracing this paradigm is no longer optional; it is essential for organizations seeking to remain competitive, innovative, and resilient in a data-driven world.

Eager to delve deeper into the transformative power of emerging technologies like AI and edge computing? Explore more of our expert analyses and insights at jurnalin.