As Artificial Intelligence spreads rapidly into more areas of daily life, the importance of AI Governance and Ethics has become hard to ignore. From autonomous systems making life-or-death decisions to algorithms influencing financial opportunities and social interactions, the impact of AI is profound. Unchecked, this power risks amplifying biases, eroding privacy, and undermining trust. As of mid-2025, robust AI governance frameworks and ethical guidelines are no longer theoretical discussions; they are urgent necessities for organizations, governments, and society, needed to ensure that AI development and deployment are responsible, fair, transparent, and aligned with human values.

What is AI Governance and Ethics?

To truly grasp the concept, it’s essential to define both “AI Governance” and “AI Ethics” and understand their synergistic relationship.

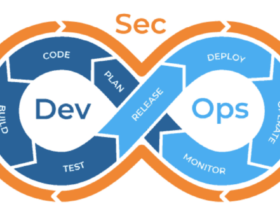

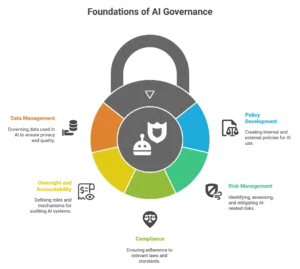

AI Governance refers to the systems, processes, and frameworks designed to manage the development, deployment, and use of AI. It’s about establishing clear rules, responsibilities, and oversight mechanisms to ensure AI technologies are developed and used safely, securely, and in compliance with laws and internal policies. Governance provides the practical structure for responsible AI.

Key aspects of AI Governance include:

- Policy Development: Creating internal and external policies for AI use.

- Risk Management: Identifying, assessing, and mitigating risks associated with AI (e.g., bias, security vulnerabilities, misuse).

- Compliance: Ensuring adherence to relevant laws, regulations, and industry standards.

- Oversight and Accountability: Defining roles, responsibilities, and mechanisms for auditing AI systems and holding individuals/organizations accountable.

- Data Management: Governing the data used to train and operate AI, ensuring privacy, quality, and ethical sourcing.

AI Ethics, on the other hand, deals with the moral principles and values that should guide the design, development, and use of AI. It asks fundamental questions about what constitutes “good” or “bad” AI behavior and seeks to embed human values into the technological fabric. Ethical considerations inform the principles upon which governance frameworks are built.

The synergy is clear: AI ethics provides the “what should be done” (the moral compass), while AI governance provides the “how it will be done” (the operational roadmap) to realize those ethical aspirations in practice.

Why is AI Governance and Ethics Crucial Now? Addressing the Risks of Unchecked AI

The rapid advancement and widespread adoption of AI technologies have highlighted several inherent risks that make robust AI Governance and Ethics absolutely vital. Without proper oversight, AI can lead to significant negative consequences for individuals, organizations, and society at large.

Here are the primary reasons why this domain is now critical:

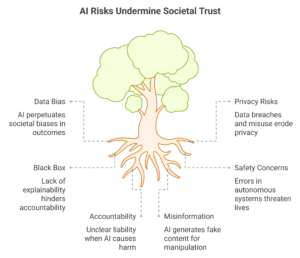

- Bias and Discrimination: AI systems learn from data. If training data reflects existing societal biases (e.g., racial, gender, socioeconomic), the AI will learn and perpetuate these biases, leading to discriminatory outcomes in areas like hiring, loan approvals, criminal justice, or healthcare.

- Privacy Violations: AI often relies on processing vast amounts of personal and sensitive data. Without strong governance, there’s a high risk of data breaches, unauthorized access, and the misuse of personal information, leading to privacy erosion.

- Lack of Transparency and Explainability (The “Black Box” Problem): Many advanced AI models (especially deep learning) are complex “black boxes,” making it difficult to understand how they arrive at a particular decision. This lack of explainability hinders accountability, auditing, and trust, particularly in high-stakes applications.

- Safety and Reliability Concerns: In autonomous systems (e.g., self-driving cars, medical devices), errors or unpredictable behavior from AI can have severe, even life-threatening, consequences. Ensuring the safety, robustness, and reliability of AI is paramount.

- Accountability Dilemmas: When an AI system causes harm, who is responsible? The developer, the deployer, the user? Clear governance frameworks are needed to define liability and accountability.

- Misinformation and Manipulation: Generative AI can produce highly realistic fake content (deepfakes, synthetic text) that can be used to spread misinformation, manipulate public opinion, or commit fraud, posing significant societal threats.

- Job Displacement and Economic Inequality: As AI automates tasks, concerns about widespread job displacement and the widening of economic disparities arise, requiring ethical considerations for workforce transition and social safety nets.

- Societal Trust and Acceptance: Ultimately, public trust is essential for AI adoption. A series of ethical failures or uncontrolled risks could erode public confidence, leading to resistance and hindering the beneficial development of AI.

The proactive establishment of AI Governance and Ethics frameworks aims to mitigate these risks, foster responsible innovation, and ensure that AI serves humanity’s best interests.
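To make the bias risk above more concrete, here is a minimal sketch of one common fairness check: comparing the rate of favourable outcomes across groups. The function names, the sample loan data, and the group labels are all hypothetical, and a real audit would look at several metrics rather than this one alone.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the model produced the favourable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical loan decisions: (applicant group, approved?)
sample = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
```

In this made-up sample, group B is approved half as often as group A. A frequently cited rule of thumb (the "four-fifths rule" from US employment guidance) treats a ratio below 0.8 as a signal worth investigating, though the right threshold depends on the context and applicable law.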

Key Principles of Ethical AI

Ethical AI is built upon a foundation of core principles designed to guide its development and deployment. While specific interpretations may vary, principles such as fairness, transparency, accountability, and human oversight are widely accepted as cornerstones of responsible AI.

These principles serve as a moral compass, informing the design choices, data practices, and deployment strategies for AI technologies. For a comprehensive look at global AI ethics guidelines, reports from organizations like the OECD on AI principles provide valuable insights.

Emerging Global Regulatory Landscape

The increasing recognition of AI’s potential and risks has spurred governments worldwide to develop regulatory frameworks for AI Governance and Ethics. While still evolving, a clear trend towards comprehensive AI regulation is emerging.

Key Regulatory Initiatives:

- The European Union (EU AI Act): As of mid-2025, the EU AI Act is widely recognized as the world’s first comprehensive legal framework for AI. It adopts a risk-based approach, categorizing AI systems into different risk levels (unacceptable, high, limited, minimal) and imposing strict requirements on high-risk AI, including conformity assessments, human oversight, transparency obligations, and robust data governance. It significantly influences global standards.

- United States: The US approach has been more fragmented, relying on a mix of executive orders, voluntary frameworks, and sector-specific regulations. The Biden administration’s Executive Order on AI (Executive Order 14110, issued in October 2023) set directives for federal agencies on AI safety, security, and responsible use; it was rescinded in January 2025. NIST (National Institute of Standards and Technology) has published an AI Risk Management Framework, and various legislative proposals are under consideration, focusing on areas like data privacy, bias in algorithms, and copyright for generative AI.

- China: China has also been proactive, with regulations targeting specific AI applications, such as deep synthesis technology (deepfakes) and generative AI, focusing on content authenticity and adherence to core socialist values. Their approach balances innovation with social control.

- United Kingdom: The UK has adopted a pro-innovation approach, initially favoring sector-specific guidelines and existing regulations over a single, overarching AI law. However, discussions are ongoing about strengthening regulatory oversight as AI capabilities advance.

This diverse global landscape means that organizations deploying AI internationally must navigate a complex web of varying compliance requirements, making robust AI Governance and Ethics strategies essential for legal and ethical operation.
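One way an organization might operationalize a risk-based approach like the EU AI Act’s is an internal inventory that records each system’s risk tier and the controls still outstanding. The sketch below is a simplified illustration, not legal text: the class and function names are hypothetical, the tier for each system is assumed to come from a legal or compliance review, and the control lists only paraphrase the requirements mentioned above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified internal checklist (an assumption for illustration).
# The high-risk entries mirror the requirements named in this article.
CONTROLS = {
    RiskTier.UNACCEPTABLE: ["do not deploy"],
    RiskTier.HIGH: [
        "conformity assessment",
        "human oversight",
        "transparency obligations",
        "data governance",
    ],
    RiskTier.LIMITED: ["transparency obligations"],
    RiskTier.MINIMAL: [],
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier  # assigned by a compliance review, not inferred by code


def required_controls(system):
    """Controls the internal checklist expects for this system's tier."""
    return list(CONTROLS[system.tier])


def missing_controls(system, completed):
    """Required controls that have not yet been marked as completed."""
    done = set(completed)
    return [c for c in required_controls(system) if c not in done]


# Hypothetical inventory entry
screener = AISystem("resume-screener", RiskTier.HIGH)
print(missing_controls(screener, {"human oversight"}))
```

Keeping the tier as an explicit, reviewed field (rather than computing it) reflects that classification is a legal judgment; the code only tracks what follows from it.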

Implementing AI Governance: Challenges and Best Practices

Establishing effective AI Governance and Ethics within an organization is a complex undertaking, fraught with technical, organizational, and cultural challenges. However, the benefits of responsible AI far outweigh these hurdles.

Common Challenges:

- Lack of Clear Roles and Responsibilities: Uncertainty about who owns AI governance (IT, legal, ethics committee?).

- Data Quality and Bias Detection: Identifying and mitigating bias in massive and often opaque datasets is technically difficult.

- Explainability Gap: Achieving transparency for complex AI models without sacrificing performance.

- Rapid Pace of AI Development: Regulations and internal policies struggle to keep up with new AI capabilities and risks.

- Skill Shortage: A lack of professionals with expertise in both AI technology and ethical/legal frameworks.

- Integration with Existing Systems: Embedding AI governance into existing MLOps, DevOps, and risk management processes.

- Cultural Resistance: Overcoming skepticism or resistance to adopting new ethical guidelines and oversight mechanisms.

Best Practices for Implementation:

- Establish a Dedicated AI Governance Framework:

  - Cross-functional AI Ethics/Governance Committee: Include representatives from legal, IT, data science, product development, and ethics.

  - Define AI Principles & Policies: Translate high-level ethical principles into actionable, internal policies and guidelines.

  - Risk Assessment Methodology: Develop a systematic approach to identify, assess, and prioritize AI-specific risks (e.g., bias audits, security vulnerability assessments).

- Invest in Responsible AI Tooling:

  - AI Observability Platforms: Tools to monitor AI model performance, detect drift, and identify potential biases in real-time.

  - Explainable AI (XAI) Tools: Software that helps interpret model decisions, making “black box” AI more transparent.

  - Data Governance Tools: Solutions for managing data quality, lineage, privacy, and access controls for AI training data.

- Prioritize Education and Training:

  - Upskill Teams: Provide training for developers, data scientists, and product managers on ethical AI principles, responsible development practices, and governance frameworks.

  - Company-wide Awareness: Educate all employees about the ethical implications of AI and their role in upholding responsible AI practices.

- Embrace Human-in-the-Loop Approaches:

  - For high-stakes decisions, ensure human oversight, intervention points, and opportunities for human review and override.

- Foster a Culture of Responsibility:

  - Integrate ethical considerations into the AI development lifecycle from conception to deployment and monitoring.

  - Encourage open dialogue about AI risks and ethical dilemmas.

- Engage with Stakeholders:

  - Seek input from diverse internal and external stakeholders, including user groups, ethicists, and legal experts, to ensure a broad perspective on AI’s impact.

  - Stay abreast of evolving regulations by engaging with industry bodies and legal advisors.
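The human-in-the-loop practice listed above can be sketched as a simple routing rule: the model decides on its own only when the case is low-stakes and its confidence is high; everything else goes to a person who can confirm or override. The names (`Decision`, `route`), the 0.9 threshold, and the reviewer callback are assumptions for illustration, not a reference to any specific product.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    decided_by: str  # "model", "human", or "pending"


def route(prediction, confidence, high_stakes, threshold=0.9, reviewer=None):
    """Return the final decision, escalating to a human where required.

    `reviewer` is an optional callable taking (prediction, confidence) and
    returning the human's outcome, which may override the model.
    """
    needs_review = high_stakes or confidence < threshold
    if not needs_review:
        return Decision(prediction, "model")
    if reviewer is None:
        # No reviewer available yet: hold the case instead of auto-deciding.
        return Decision("pending_review", "pending")
    return Decision(reviewer(prediction, confidence), "human")


# Hypothetical usage
print(route("approve", 0.97, high_stakes=False))
print(route("approve", 0.97, high_stakes=True))
print(route("approve", 0.55, high_stakes=False,
            reviewer=lambda pred, conf: "deny"))
```

Note that a high-stakes case is escalated even when the model is confident. That is a deliberate design choice in this sketch: confidence scores can be miscalibrated, so stakes and confidence are treated as separate triggers.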

Organizations like IBM have developed comprehensive frameworks for trustworthy AI, which can serve as a valuable resource for best practices. You can learn more about practical implementation strategies through IBM’s perspective on Building Trustworthy AI.
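As an illustration of the drift detection that observability tooling performs, here is a minimal sketch of the Population Stability Index (PSI), one widely used statistic for comparing a feature’s distribution in production against its training baseline. The function name, the bin count, and the sample data are assumptions; production tools typically add windowing, alerting, and per-feature configuration on top of a check like this.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a new sample.

    Bins are equal-width and derived from the baseline (`expected`).
    Values outside the baseline range fall into the first or last bin.
    Both inputs must be non-empty sequences of numbers.
    """
    lo = min(expected)
    hi = max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline vs. shifted production data
baseline = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in baseline]

print(psi(baseline, baseline))
print(psi(baseline, shifted))
```

An unchanged distribution yields a PSI of zero, while the shifted sample produces a much larger value. A commonly quoted rule of thumb treats values above roughly 0.25 as significant drift, but teams usually tune such thresholds to their own data.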

The Future of Responsible AI: Proactive and Adaptive

The journey toward responsible AI is continuous. The future of AI Governance and Ethics will be characterized by increasingly sophisticated tools, adaptive regulatory frameworks, and a deeper integration of ethical considerations into the very fabric of AI development. We can expect:

- AI-powered Governance Tools: AI itself will be used to help monitor, audit, and manage other AI systems for compliance and ethical adherence.

- Global Harmonization Efforts: While diverse regulations exist, there will likely be increasing efforts towards international collaboration and harmonization of AI standards to facilitate global innovation and trade.

- Focus on AI Explainability and Trust (XAI-T): Research will continue to advance not just explainability but also methods to measure and build trust in AI systems.

- Emphasis on Pre-Deployment Ethics: More emphasis will be placed on “ethics by design,” embedding ethical principles at the earliest stages of AI system conception and development, rather than as an afterthought.

- Broader Societal Dialogue: Continued public engagement and debate will shape the evolution of AI ethics, ensuring that technological progress remains aligned with societal values and democratic principles.

The rapid proliferation of Artificial Intelligence presents humanity with immense opportunities, but also profound responsibilities. The conscious and proactive establishment of robust AI Governance and Ethics frameworks is not merely a legal or compliance burden; it is an imperative for building trust, fostering sustainable innovation, and ensuring that AI serves as a force for good. By embracing principles of fairness, transparency, accountability, and human oversight, organizations and governments can navigate the complexities of the AI era responsibly, unlocking its transformative potential while safeguarding societal values.
